After encountering the “RuntimeError: GPU is required to quantize or run quantize model.” error while training my PyTorch model, I realized my GPU wasn’t configured correctly, hindering performance.

The “RuntimeError: GPU is required to quantize or run quantize model.” error occurs when your system lacks a compatible GPU or the GPU is not set up properly. To fix this, ensure your GPU is installed, drivers are updated, and CUDA is configured correctly for PyTorch.

Struggling with the “RuntimeError: GPU is required to quantize or run quantize model” error? Discover simple solutions and tips to get your model running smoothly on your GPU in this article!

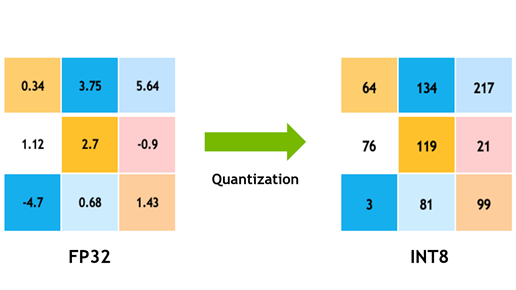

What Is Quantization?

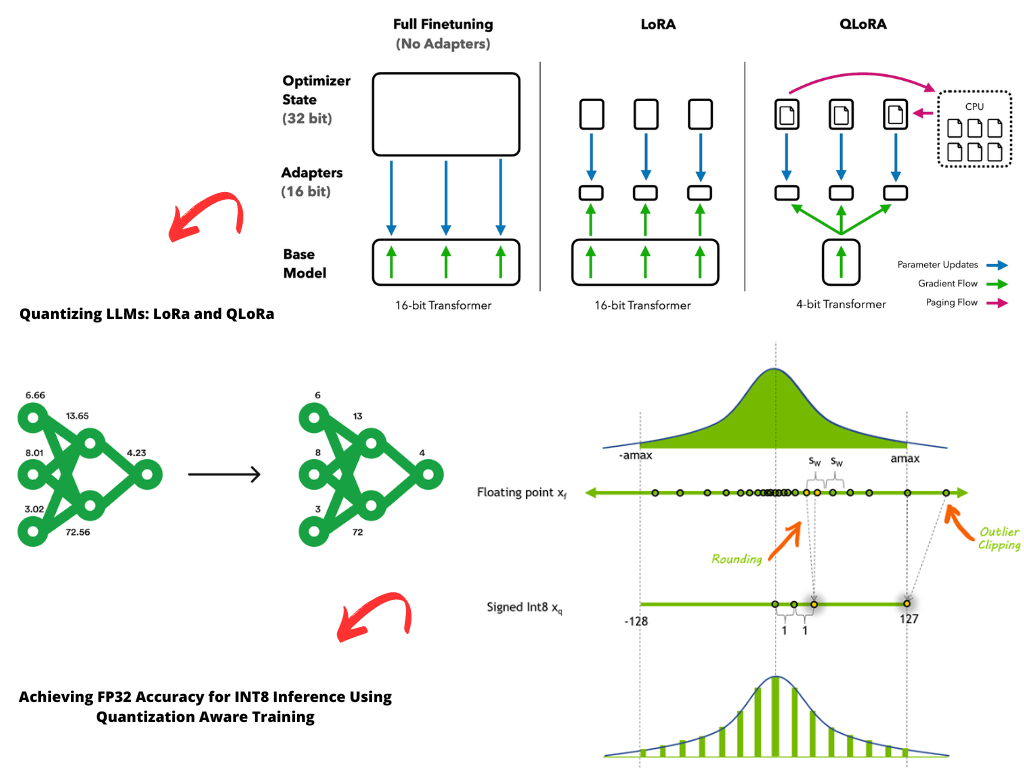

Quantization in machine learning reduces the precision of model weights and activations, typically from 32-bit floating-point (FP32) to 16-bit (FP16) or 8-bit integers (INT8). This technique improves model efficiency by decreasing size, reducing computational load, and speeding up inference, which is crucial for edge devices or low-power platforms.
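To make the FP32-to-INT8 step concrete, here is a minimal sketch of affine (scale and zero-point) quantization in plain Python. The function names are illustrative and not taken from any library; real frameworks apply the same arithmetic to whole tensors with optimized kernels.

```python
def int8_params(values):
    """Compute a scale and zero point that map [min, max] onto the INT8 range [-128, 127]."""
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)  # include 0 so it stays exact
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-128 - lo / scale)
    return scale, zero_point


def quantize(values, scale, zero_point):
    """FP32 -> INT8: divide by the scale, round, shift by the zero point, clamp."""
    return [max(-128, min(127, round(v / scale) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """INT8 -> FP32 approximation of the original values."""
    return [(q - zero_point) * scale for q in q_values]


weights = [-1.2, -0.5, 0.0, 0.31, 0.9, 2.4]
scale, zp = int8_params(weights)
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 codes:", q)
print("max round-trip error:", max_error, "(bounded by scale / 2 =", scale / 2, ")")
```

Each value is stored in one byte instead of four, and the rounding error per value stays within half a quantization step.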

What Does RuntimeError Mean?

In Python, RuntimeError is a built-in exception raised when an error is detected while the program is running and the problem does not fall into a more specific category. Unlike a syntax error, it only appears once the offending code actually executes. In this context, the error means that a specific requirement for quantization has not been met: a usable GPU.

What Does The Error “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Mean?

This error message means that your machine learning model is attempting to perform a quantization operation (or run a previously quantized model) that requires the computational power of a GPU. If your environment is not equipped with a GPU or if the GPU isn’t correctly set up, this error will occur.
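Tools that raise this error typically run a check along these lines before quantizing or loading a quantized model. The sketch below is a hypothetical illustration of that pattern, not the code of any specific library; the `cuda_available` flag stands in for a real check such as `torch.cuda.is_available()`.

```python
def require_gpu(cuda_available: bool) -> None:
    """Hypothetical guard: refuse to quantize when no CUDA GPU is usable."""
    if not cuda_available:
        raise RuntimeError("GPU is required to quantize or run quantize model.")


def load_quantized_model(cuda_available: bool) -> str:
    """Hypothetical loader: fails fast before doing any expensive work."""
    require_gpu(cuda_available)
    return "quantized model loaded"  # placeholder for the real loading logic


try:
    load_quantized_model(cuda_available=False)
except RuntimeError as err:
    print("Caught:", err)
```

Because the check only runs when this code path executes, the script can start normally and fail later, which is exactly what makes it a runtime error.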

Why Is A GPU Needed For Quantizing Models?

GPUs are designed for parallel processing, which makes them highly effective for computationally intensive tasks such as deep learning. Some quantization libraries implement their low-precision kernels only for CUDA GPUs, so they check for a GPU up front and raise an error like this one when none is found. Other tools, including PyTorch’s built-in quantization, target the CPU instead.

For quantization-aware training or inference, a GPU performs these calculations much faster than a CPU, which improves overall throughput and makes it feasible to handle larger datasets or real-time applications.

Common Causes Of “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model”

1. No GPU Available:

The first possible cause is that your system has no GPU, or that the GPU is not detected. Some quantization tools used with PyTorch require a CUDA-capable GPU and raise this error when none is found. You can check whether PyTorch sees a GPU with the following code:

import torch

if not torch.cuda.is_available():
    print("No GPU is available. Please check your GPU setup.")
else:
    print("GPU is available.")

If this code prints “No GPU is available,” you may need to install the appropriate drivers or check your hardware.

2. CUDA Setup Issues:

CUDA (Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) model created by NVIDIA. If CUDA is not set up correctly, your machine learning framework might not be able to utilize the GPU, leading to the runtime error.

To check if CUDA is installed and properly configured, you can run this code:

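import torch

# torch.version.cuda is None for CPU-only PyTorch builds
print("PyTorch built with CUDA:", torch.version.cuda)

if torch.cuda.is_available():
    print("CUDA is available.")
else:
    print("CUDA is not available. Please install or configure CUDA correctly.")

3. Incorrect Model Configuration: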

Another reason for this error is that the model may not be configured to use the GPU. In PyTorch, you need to explicitly move your model and data to the GPU using .to(device). Here’s how you can do it:

import torch
import torch.nn as nn

# Define a simple model
class SimpleModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(10, 2)

    def forward(self, x):
        return self.fc(x)

# Initialize the model
model = SimpleModel()

# Use the GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model.to(device)  # nn.Module.to() moves the parameters in place

# Create dummy input data on the same device as the model
input_data = torch.randn(1, 10).to(device)

# Run the model
output = model(input_data)
print("Model output:", output)

Fixing The CUDA Setup:

To resolve any issues with CUDA, follow these steps:

1. Check CUDA Installation:

Ensure that CUDA is installed by running:

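nvcc --version

If CUDA isn’t installed, download and install it from the NVIDIA CUDA Toolkit page. Note that nvcc is part of the full CUDA Toolkit: the prebuilt PyTorch packages ship their own CUDA runtime, so a missing nvcc does not by itself mean PyTorch cannot use your GPU.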

2. Check GPU Compatibility:

Confirm that your GPU is supported by CUDA and ensure that your drivers are up to date.

3. Test CUDA in PyTorch:

Run the following in Python to confirm that PyTorch recognizes the GPU:

import torch

print(torch.cuda.is_available())  # True means PyTorch can use a CUDA GPU

How Can I Check If I Have A GPU Available To Fix The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Error?

To determine if your machine has a GPU available for use in PyTorch and to address the “RuntimeError: GPU is required to quantize or run quantize model” error, follow these steps:

1. Check GPU Availability in PyTorch:

You can use the following Python code snippet to check if a GPU is available:

import torch

if torch.cuda.is_available():
    print("GPU is available!")
else:
    print("No GPU detected.")

If the output indicates “No GPU detected,” you may need to install drivers, set up CUDA, or consider switching to a system with a GPU.

2. Open a Terminal or Command Prompt

Open a terminal (Linux/macOS) or Command Prompt (Windows). This allows you to run the commands below to check for GPU availability.

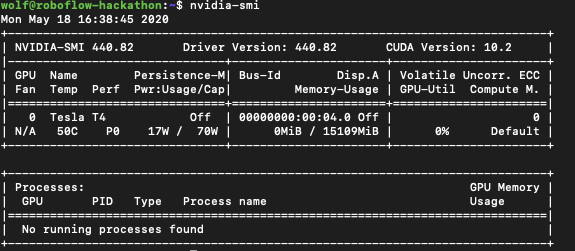

3. Check for NVIDIA GPUs

If you have an NVIDIA GPU, you can use the following command to check if it is recognized by your system:

· Windows and Linux (the command is the same on both):

nvidia-smi

This command displays the status of your NVIDIA GPU, including the driver version, memory usage, and running processes. If you see this information, your NVIDIA GPU and its driver are working.

4. Check for Other GPUs:

If you do not have an NVIDIA GPU, you can check for other types of GPUs (like AMD) using the following commands:

· Windows:

wmic path win32_VideoController get name

· Linux:

lspci | grep -i vga

These commands list the video controllers installed on your system. If you see your GPU listed, the operating system has detected it.
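If you prefer to run these checks from Python, for example at the top of a training script, a small helper can call the same command-line tools through subprocess. This is a sketch: it only reports whether each tool exists and what it prints, and the helper name run_tool is hypothetical.

```python
import shutil
import subprocess
from typing import Optional


def run_tool(*cmd: str) -> Optional[str]:
    """Run a command-line tool; return its stdout, or None if it is missing or fails."""
    if shutil.which(cmd[0]) is None:
        return None  # tool not installed or not on PATH
    try:
        result = subprocess.run(cmd, capture_output=True, text=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        return None
    return result.stdout if result.returncode == 0 else None


smi = run_tool("nvidia-smi")
nvcc = run_tool("nvcc", "--version")
print("NVIDIA driver responds (nvidia-smi):", smi is not None)
print("CUDA Toolkit compiler found (nvcc):", nvcc is not None)
if smi:
    print(smi)
```

A working nvidia-smi with a missing nvcc is a common and usually harmless combination for PyTorch users, since the prebuilt packages bring their own CUDA runtime.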

Which GPU Drivers Should I Install To Resolve “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.”?

To resolve the “RuntimeError: GPU is required to quantize or run quantize model,” you need to install the correct GPU drivers for your system. If you have an NVIDIA GPU, you should install the latest NVIDIA drivers along with CUDA (Compute Unified Device Architecture) and cuDNN (CUDA Deep Neural Network library).

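The driver lets the operating system talk to the GPU, while CUDA and cuDNN provide the libraries that frameworks like PyTorch use for GPU computation. For AMD GPUs, install the latest AMD drivers; note that PyTorch’s AMD GPU support goes through AMD’s ROCm platform and a ROCm build of PyTorch rather than the regular CUDA builds. Always download drivers from the official websites (NVIDIA or AMD) to ensure compatibility and stability for your system.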
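After installing drivers, it helps to confirm which CUDA and cuDNN versions your PyTorch build actually uses. The sketch below relies on documented attributes (torch.version.cuda, torch.backends.cudnn.version(), torch.cuda.get_device_name()); the function name torch_gpu_report and the fallback for machines without PyTorch are our own additions.

```python
import importlib.util


def torch_gpu_report() -> dict:
    """Summarize what the installed PyTorch build can see (safe to call without torch)."""
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}
    import torch

    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "built_with_cuda": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
        "cudnn_version": torch.backends.cudnn.version(),  # None if the build has no cuDNN
    }
    if report["cuda_available"]:
        report["device_name"] = torch.cuda.get_device_name(0)
    return report


for key, value in torch_gpu_report().items():
    print(f"{key}: {value}")
```

If built_with_cuda is None, you have a CPU-only PyTorch build and no driver update will help; reinstall a CUDA-enabled build instead.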

How Do I Set Up My Environment Variables To Fix The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Error?

To fix the “RuntimeError: GPU is required to quantize or run quantize model” error, you need to set up your environment variables properly, especially for CUDA, which is crucial for GPU usage in machine learning models. Here’s how to do it:

First, ensure that CUDA and cuDNN (if needed) are installed. Then, set the CUDA_HOME and PATH environment variables to point to the CUDA installation. On Windows, open System Properties > Environment Variables and add the CUDA paths to the PATH variable. On Linux, you can add the following lines to your .bashrc or .bash_profile file:

export CUDA_HOME=/usr/local/cuda
export PATH=$PATH:$CUDA_HOME/bin

After setting these, open a new terminal (or run source ~/.bashrc) or restart your computer, then confirm that PyTorch or your framework recognizes the GPU (e.g., with torch.cuda.is_available() in PyTorch). These variables mainly matter for tools that compile CUDA code or look for the CUDA Toolkit on disk; if the error came from such a tool not finding CUDA, setting them should resolve it.
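To confirm the variables took effect in a new shell, you could run a quick check like the one below. It is a minimal sketch that only verifies that CUDA_HOME is set and that its bin directory is on PATH; /usr/local/cuda is the common default install location on Linux, not a guaranteed one, and check_cuda_env is a hypothetical helper name.

```python
import os
from typing import List, Mapping, Optional


def check_cuda_env(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return problems with CUDA_HOME/PATH; an empty list means they look consistent."""
    env = os.environ if env is None else env
    cuda_home = env.get("CUDA_HOME")
    if not cuda_home:
        return ["CUDA_HOME is not set"]
    cuda_bin = os.path.normpath(os.path.join(cuda_home, "bin"))
    # Normalize entries so trailing slashes don't cause false alarms
    path_entries = [os.path.normpath(p) for p in env.get("PATH", "").split(os.pathsep) if p]
    if cuda_bin not in path_entries:
        return [f"{cuda_bin} is not on PATH"]
    return []


for problem in check_cuda_env():
    print("Warning:", problem)
```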

What Is Quantization In Machine Learning?

Quantization in machine learning is a technique that reduces the precision of a model’s weights and activations, typically from 32-bit to lower precision like 16-bit or 8-bit. This helps make models smaller, faster, and more efficient, especially for devices with limited resources like mobile or edge devices.

What Code Changes Are Needed To Use A GPU In Pytorch?

To use a GPU in PyTorch, you need to move your model and data to the GPU. First, check if a GPU is available using torch.cuda.is_available(). If it is, transfer the model and data with .to(device), where device = torch.device("cuda"). Here’s an example:

import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = MyModel().to(device)  # MyModel stands for your own nn.Module subclass
data = data.to(device)  # data is your input tensor; Tensor.to() returns a tensor, so reassign it

This ensures the model and data run on the GPU (when one is available) for faster computations.

Can I Use Cloud Services To Resolve “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.”?

Yes, you can use cloud services to resolve the “RuntimeError: GPU is required to quantize or run quantize model” issue. Cloud platforms like AWS, Google Cloud, and Azure offer virtual machines with powerful GPUs, allowing you to run and train your models efficiently without needing a physical GPU on your local machine.

Can The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model” Error Occur In TensorFlow?

Usually not with this exact wording: the message appears to come from PyTorch-based quantization tooling rather than from TensorFlow itself. TensorFlow GPU setups do fail for the same underlying reasons, though: no GPU, outdated drivers, or CUDA and cuDNN versions that don’t match what your TensorFlow build expects. When TensorFlow cannot find a GPU, it typically falls back to the CPU or reports that no GPU device is available, so the same fixes apply.
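To see whether TensorFlow itself detects a GPU, you can list its physical GPU devices with tf.config.list_physical_devices. The sketch below wraps that call so it also runs on machines without TensorFlow; the function name tensorflow_gpus is hypothetical.

```python
import importlib.util
from typing import List, Optional


def tensorflow_gpus() -> Optional[List[str]]:
    """Names of the GPUs TensorFlow can see, or None if TensorFlow is not installed."""
    if importlib.util.find_spec("tensorflow") is None:
        return None
    import tensorflow as tf

    return [device.name for device in tf.config.list_physical_devices("GPU")]


gpus = tensorflow_gpus()
if gpus is None:
    print("TensorFlow is not installed.")
elif not gpus:
    print("TensorFlow sees no GPU; check drivers and the CUDA/cuDNN versions your build expects.")
else:
    print("TensorFlow GPUs:", gpus)
```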

What Does CUDA_VISIBLE_DEVICES Do?

`CUDA_VISIBLE_DEVICES` is an environment variable that controls which GPUs are visible to a program using CUDA. By setting this variable, you can specify which GPU or GPUs your code can use, making it helpful for managing resources on systems with multiple GPUs.
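The variable must be set before the CUDA runtime initializes, so the most reliable place is the launching environment, e.g. CUDA_VISIBLE_DEVICES=0 python train.py in a Linux shell. From Python, a minimal sketch is to pass a modified environment to a child process; the helper name run_with_gpus is hypothetical. Inside the child, the selected GPUs are renumbered from 0, so selecting [0, 2] makes host GPU 2 appear as cuda:1.

```python
import os
import subprocess
import sys
from typing import List


def run_with_gpus(code: str, gpu_ids: List[int]) -> str:
    """Run Python code in a child process that can only see the listed GPUs."""
    env = os.environ.copy()
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    result = subprocess.run(
        [sys.executable, "-c", code],
        env=env,
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


# The child sees only the value we set; a CUDA program there would see at most 2 GPUs.
print(run_with_gpus('import os; print(os.environ["CUDA_VISIBLE_DEVICES"])', [0, 2]))
```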

How Do You Reduce Quantization Error?

To reduce quantization error, you can use techniques like fine-tuning the model after quantization, applying mixed-precision quantization, or using more advanced algorithms like quantization-aware training. These methods help maintain the model’s accuracy while benefiting from lower precision.

How Can I Quantize A Model For CPU?

To quantize a model for CPU, you can convert its weights and activations from higher precision (like FP32) to lower precision formats (like INT8). This reduces the model’s size and speeds up inference. In frameworks like PyTorch, you can use built-in quantization tools to do this easily.
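As a minimal sketch of PyTorch’s built-in tooling, dynamic quantization converts the weights of selected layer types to INT8 ahead of time and quantizes activations on the fly during inference; it runs on the CPU and needs no GPU. The tiny two-layer model is a placeholder for your own, and which quantized backend is used (for example fbgemm/x86 on Intel or AMD CPUs, qnnpack on ARM) depends on your platform and PyTorch version.

```python
import torch
import torch.nn as nn

# Placeholder FP32 model; substitute your own trained model.
model = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 4))
model.eval()  # quantize for inference, not training

# Replace every nn.Linear with a dynamically quantized INT8 version (CPU only).
quantized = torch.ao.quantization.quantize_dynamic(model, {nn.Linear}, dtype=torch.qint8)

x = torch.randn(8, 16)
with torch.no_grad():
    y_fp32 = model(x)
    y_int8 = quantized(x)

print(quantized)
print("max difference vs FP32:", (y_fp32 - y_int8).abs().max().item())
```

The outputs stay in floating point; only the Linear layers’ weights are stored as INT8, which is where most of the size and speed savings come from in models dominated by linear layers.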

Can You Run A Quantized Model On GPU?

Yes, you can run a quantized model on a GPU, but it depends on the GPU’s support for lower precision operations like INT8. GPUs with specialized hardware, such as NVIDIA’s Tensor Cores, can efficiently handle quantized models. Using a supported GPU can boost performance while maintaining accuracy.

Can A 65B Quantized Model Run On A CPU?

A 65B quantized model refers to a machine learning model with 65 billion parameters that has been quantized to use lower precision, like 8-bit integers. Running this large model on a CPU is challenging because of its size and computational demands. However, quantization reduces memory usage and speeds up processing, making it more manageable for CPUs, though it may still be slower than using a GPU.
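A quick back-of-the-envelope calculation shows why quantization matters at this scale. The sketch counts weights only and uses decimal gigabytes (10^9 bytes); activations, the KV cache, and runtime overhead come on top of these figures.

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


PARAMS_65B = 65_000_000_000
for label, bits in [("FP32", 32), ("FP16", 16), ("INT8", 8), ("4-bit", 4)]:
    print(f"{label:>5}: {weight_memory_gb(PARAMS_65B, bits):6.1f} GB")
```

This gives 260.0, 130.0, 65.0 and 32.5 GB respectively, so even at 8 bits the weights alone need about 65 GB of RAM, which is why lower-bit formats are attractive for running models of this size on CPUs.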

Configuring Your Model for GPU Usage

To configure your model for GPU usage, ensure that the model and data are moved to the GPU using commands like `model.to(device)` and `data.to(device)`, where `device` is typically set to `cuda` for GPU. This allows faster processing by utilizing the GPU’s computational power.

Alternative Solutions: Running Quantized Models on CPU

Running quantized models on a CPU is an alternative when a GPU isn’t available. Quantization reduces model size and processing requirements, allowing the CPU to handle computations more efficiently. This can still provide reasonable performance, especially for less resource-intensive tasks.

GPU Is Needed For Quantization In M2 MacOS · Issue #23970

Apple Silicon Macs such as the M2 have no NVIDIA GPU, so CUDA is not available; PyTorch reaches the built-in Apple GPU through its MPS (Metal Performance Shaders) backend instead. Quantization tools that check specifically for a CUDA GPU therefore raise this error on an M2 Mac even though the machine has a GPU. In that case, use a CPU-based quantization path or run the quantization on a machine (or cloud instance) with an NVIDIA GPU.
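A script meant to run on NVIDIA machines, Apple Silicon Macs, and plain CPUs can pick its device in order of preference. The sketch below keeps the selection logic free of PyTorch so it is easy to test; pick_device is a hypothetical helper. Keep in mind that MPS does not satisfy tools that specifically require CUDA.

```python
def pick_device(cuda_available: bool, mps_available: bool) -> str:
    """Choose a PyTorch device string: CUDA first, then Apple's MPS, then CPU."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"  # Apple Silicon GPU backend; not a CUDA device
    return "cpu"


# Example: an M2 Mac has no CUDA but does have MPS.
print(pick_device(cuda_available=False, mps_available=True))
```

In a real script you would call it as torch.device(pick_device(torch.cuda.is_available(), torch.backends.mps.is_available())).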

Running Pytorch Quantized Model On CUDA GPU

Running a PyTorch quantized model on a CUDA GPU involves converting the model to use lower-precision data types, like INT8, while utilizing the GPU for faster computations. This boosts performance by reducing memory usage and speeding up inference without sacrificing much accuracy. It’s especially useful for deploying models on devices with limited resources.

FAQs:

1. What Is The Purpose Of Quantization In Machine Learning Models?

Quantization helps make machine learning models smaller and faster by using fewer bits to store data, making them more efficient for devices with less processing power.

2. Can I Use A CPU Instead Of A GPU To Run A Quantized Model?

Yes, you can run quantized models on a CPU, but they will likely run slower compared to using a GPU. GPUs are designed for faster computation, especially for deep learning tasks.

3. Do I Need To Install Both CUDA And cuDNN To Fix This Error?

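Not always. The official PyTorch pip and conda packages ship with (or automatically install) the CUDA runtime and cuDNN libraries they need, so for them an up-to-date NVIDIA driver is usually enough. A separate CUDA Toolkit and cuDNN installation matters mainly when you compile CUDA code yourself or use software that expects these libraries to be installed system-wide.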

4. What Happens If My GPU Doesn’t Have Enough Memory For The Quantized Model?

If your GPU doesn’t have enough memory, you may encounter out-of-memory errors during training or inference. You can try a smaller model, a lower-bit quantization, or a smaller batch size, or switch to a GPU with more memory.

5. Can I Use Older GPUs For Quantizing Models?

Older GPUs may work, but they may not support the latest versions of CUDA and may perform slower or not work efficiently for quantization tasks.

6. How Can I Test If My GPU Is Properly Working For Quantization?

You can test your GPU by running a simple PyTorch or TensorFlow code that checks if the GPU is available. If the test passes, your GPU is ready for quantization.

7. Can Quantization Reduce The Accuracy Of My Model?

Yes, quantization can slightly reduce the accuracy of your model because it lowers the precision of data, but the performance gain often outweighs the accuracy loss.

8. Can Quantization Be Applied To All Types Of Neural Networks?

Quantization can be applied to most types of neural networks, but some specific models or layers may require adjustments to support quantization effectively.

9. Is Quantization Necessary For All Machine Learning Tasks?

No, quantization is not necessary for every task. It is mainly used when you need to improve performance and reduce model size, especially for mobile or edge devices.

10. Will Updating My GPU Drivers Help Fix This Error?

Yes, updating your GPU drivers ensures that your GPU is compatible with the latest deep learning libraries, which can help resolve errors like this one.

Final Words:

In summary, encountering the “RuntimeError: GPU is required to quantize or run quantize model” means your GPU is not properly set up or available. Make sure your GPU is correctly installed, drivers are updated, and CUDA is configured in your environment. If you don’t have a GPU, consider using cloud services or running models on the CPU. By addressing these issues, you can successfully utilize quantization and leverage your model’s full potential!
